What is a Module in JavaScript?

In simple terms, a module is a piece of reusable JavaScript code. It could be a .js file or a directory containing .js files. You can export the content of these files and use them in other files.

Module in Node.js is a simple or complex functionality organized in single or multiple JavaScript files which can be reused throughout the Node.js application.

Each module in Node.js has its own context, so it cannot interfere with other modules or pollute global scope. Also, each module can be placed in a separate .js file under a separate folder.

What is Node.js?

Node.js is an open-source framework for building fast and scalable server-side JavaScript applications. NodeJS uses an event-driven, non-blocking I/O model that makes it lightweight and efficient.The NodeJS process model can be explained with three architectural features of NodeJS.

Single-threaded: In Node.js all requests are single-threaded and collected in an event loop. The event loop is what allows Node.js to perform all non-blocking operations. This means that everything from receiving the request to performing the tasks to sending the response to the client is executed in a single thread. This feature prevents reloading and reduces context switching time.

Highly Scalable: Node.js applications are highly scalable because they run asynchronously. Node.js can efficiently handle concurrent requests while balancing all active CPU cores. This feature of Node.js is very beneficial for developers.

Cross-Platform Compatibility: Node.js can be used on a wide variety of systems, from Windows to Mac OS, Linux, and even mobile platforms.

JavaScript: Most developers already have a good understanding of JavaScript, how it works, and other basic and advanced concepts related to it. Node.js allows developers to use JavaScript for backend development. This is convenient because developers don't have to switch between multiple programming languages and can make full-stack projects by only knowing JavaScript.

Fast Data Streaming: Node.js is built on Google Chrome's V8 JavaScript engine, which makes your code run faster. The engine compiles JavaScript code into machine code. This allows Node.js to run significantly faster and provides fast data flow for web applications. Concepts like asynchronous programming and how it works with non-blocking I/O operations make Node.js efficient.

No buffering: Node.js works with data streams, which are aggregated data. Therefore, the user can get the data more easily and quickly because there is no need to wait for the entire operation to complete. It reduces the overall time required for processing. Because of this, there is little or no data buffering with Node.js.

Asynchronous: Node.js is asynchronous by default i.e. that a server built using Node.js does not need to wait for the date from an API. In other words, Node.js works in a non-blocking way, that does not block the execution of any further operation. Asynchronous and non-blocking I/O improves both response time and user experience.

This feature means that if a Node receives a request for some Input/Output operation, it will execute that operation in the background and continue with the processing of other requests. Thus it will not wait for the response from the previous requests.

What is Synchronous Code?

When we write a program in JavaScript, it executes line by line. When a line is completely executed, then and then only does the code move forward to execute the next line.

What is Asynchronous Code?

With asynchronous code, multiple tasks can execute at the same time while tasks in the background finish. This is what we call non-blocking code. The execution of other code won't stop while an asynchronous task finishes its work.

Event-driven: The concept of event-driven is similar to the concept of callback functions in asynchronous programming. In Node.js, callback functions, also known as event handlers, are executed when an event is triggered or completed. Callback functions require fewer resources on the server side and consume less memory. This feature of Node.js makes the application lightweight.

What is NPM?

NPM (Node Package Manager) is a package manager for Node.js that allows developers to easily install, manage, and share packages of code.

How to create a simple server in Node.js that returns Hello World?

/**

* Express.js

*/

const express = require('express');

const app = express();

app.get('/', function (req, res) {

res.send('Hello World!');

});

app.listen(3000, function () {

console.log('App listening on port 3000!');

});

Explain the concept of URL module in Node.js?

The URL module splits up a web address into readable parts.To include the URL module, use the require() method:var url = require('url');

Parse an address with the url.parse() method, and it will return a URL object with each part of the address as properties:

ExampleGet your own Node.js Server

Split a web address into readable parts:

var url = require('url');

var adr = 'http://localhost:8080/default.htm?year=2017&month=february';

var q = url.parse(adr, true);

console.log(q.host); //returns 'localhost:8080'

console.log(q.pathname); //returns '/default.htm'

console.log(q.search); //returns '?year=2017&month=february'

var qdata = q.query; //returns an object: { year: 2017, month: 'february' }

console.log(qdata.month); //returns 'february'

ts What are the data types in Node.js?

Just like JS, there are two categories of data types in Node: Primitives and Objects.

1. Primitives:

- String

- Number

- BigInt

- Boolean

- Undefined

- Null

- Symbol

2. Objects:

- Function

- Array

- Buffer

Explain String data type in Node.js?

Strings in Node.js are sequences of unicode characters. Strings can be wrapped in a single or double quotation marks. Javascript provide many functions to operate on string, like indexOf(), split(), substr(), length.

String functions:

Function Description charAt() It is useful to find a specific character present in a string. concat() It is useful to concat more than one string. indexOf() It is useful to get the index of a specified character or a part of the string. match() It is useful to match multiple strings. split() It is useful to split the string and return an array of string. join() It is useful to join the array of strings and those are separated by comma (,) operator.

Example:

/**

* String Data Type

*/

const str1 = "Hello";

const str2 = 'World';

console.log("Concat Using (+) :" , (str1 + ' ' + str2));

console.log("Concat Using Function :" , (str1.concat(str2)));

Explain Number data type in Node.js?

The number data type in Node.js is 64 bits floating point number both positive and negative. The parseInt() and parseFloat() functions are used to convert to number, if it fails to convert into a number then it returns NaN.

Example:

/**

* Number Data Type

*/

// Example 01:

const num1 = 10;

const num2 = 20;

console.log(`sum: ${num1 + num2}`);

// Example 02:

console.log(parseInt("32")); // 32

console.log(parseFloat("8.24")); // 8.24

console.log(parseInt("234.12345")); // 234

console.log(parseFloat("10")); // 10

// Example 03:

console.log(isFinite(10/5)); // true

console.log(isFinite(10/0)); // false

// Example 04:

console.log(5 / 0); // Infinity

console.log(-5 / 0); // -Infinity

Explain Boolean data type in Node.js?

Boolean data type is a data type that has one of two possible values, either true or false. In programming, it is used in logical representation or to control program structure.

The boolean() function is used to convert any data type to a boolean value. According to the rules, false, 0, NaN, null, undefined, empty string evaluate to false and other values evaluates to true.

Example:

/**

* Boolean Data Type

*/

// Example 01:

const isValid = true;

console.log(isValid); // true

// Example 02:

console.log(true && true); // true

console.log(true && false); // false

console.log(true || false); // true

console.log(false || false); // false

console.log(!true); // false

conso

Explain Undefined and Null data type in Node.js?

In node.js, if a variable is defined without assigning any value, then that will take undefined as value. If we assign a null value to the variable, then the value of the variable becomes null.

Example:

/**

* NULL and UNDEFINED Data Type

*/

let x;

console.log(x); // undefined

let y = null;

console.log(y); // null

Q. Explain Symbol data type in Node.js?

Symbol is an immutable primitive value that is unique. It's a very peculiar data type. Once you create a symbol, its value is kept private and for internal use.

Example:

/**

* Symbol Data Type

*/

const NAME = Symbol()

const person = {

[NAME]: 'Ritika Bhavsar'

}

person[NAME] // 'Ritika Bhavsar'

Q. Explain function in Node.js?

Functions are first class citizens in Node's JavaScript, similar to the browser's JavaScript. A function can have attributes and properties also. It can be treated like a class in JavaScript.

Example:

/**

* Function in Node.js

*/

function Messsage(name) {

console.log("Hello "+name);

}

Messsage("World"); // Hello Worldle.log(!false); // true

Q. Explain Buffer data type in Node.js?

Node.js includes an additional data type called Buffer ( not available in browser's JavaScript ). Buffer is mainly used to store binary data, while reading from a file or receiving packets over the network.

Example:

/**

* Buffer Data Type

*/

let b = new Buffer(10000);

let str = "----------";

b.write(str);

console.log( str.length ); // 10

console.log( b.length ); // 10000

Note: Buffer() is deprecated due to security and usability issues.

What are the core modules of Node.js?

Node.js has a set of core modules that are part of the platform and come with the Node.js installation. These modules can be loaded into the program by using the require function.

Syntax:

const module = require('module_name');

Example:

const http = require('http');

http.createServer(function (req, res) {

res.writeHead(200, {'Content-Type': 'text/html'});

res.write('Welcome to Node.js!');

res.end();

}).listen(3000);

What are the core modules of Node.js?

Node.js has a set of core modules that are part of the platform and come with the Node.js installation. These modules can be loaded into the program by using the require function.

Syntax:

const module = require('module_name');

Example:

const http = require('http');

http.createServer(function (req, res) {

res.writeHead(200, {'Content-Type': 'text/html'});

res.write('Welcome to Node.js!');

res.end();

}).listen(3000);

The following table lists some of the important core modules in Node.js.

Name Description Assert It is used by Node.js for testing itself. It can be accessed with require('assert'). Buffer It is used to perform operations on raw bytes of data which reside in memory. It can be accessed with require('buffer') Child Process It is used by node.js for managing child processes. It can be accessed with require('child_process'). Cluster This module is used by Node.js to take advantage of multi-core systems, so that it can handle more load. It can be accessed with require('cluster'). Console It is used to write data to console. Node.js has a Console object which contains functions to write data to console. It can be accessed with require('console'). Crypto It is used to support cryptography for encryption and decryption. It can be accessed with require('crypto'). HTTP It includes classes, methods and events to create Node.js http server. URL It includes methods for URL resolution and parsing. Query String It includes methods to deal with query string. Path It includes methods to deal with file paths. File System It includes classes, methods, and events to work with file I/O. Util It includes utility functions useful for programmers. Zlib It is used to compress and decompress data. It can be accessed with require('zlib').

What are the global objects of Node.js?

Node.js Global Objects are the objects that are available in all modules. Global Objects are built-in objects that are part of the JavaScript and can be used directly in the application without importing any particular module.

These objects are modules, functions, strings and object itself as explained below.

1. global:

It is a global namespace. Defining a variable within this namespace makes it globally accessible.

var myvar;

2. process:

It is an inbuilt global object that is an instance of EventEmitter used to get information on current process. It can also be accessed using require() explicitly.

3. console:

It is an inbuilt global object used to print to stdout and stderr.

console.log("Hello World"); // Hello World

4. setTimeout(), clearTimeout(), setInterval(), clearInterval():

The built-in timer functions are globals

function printHello() {

console.log( "Hello, World!");

}

// Now call above function after 2 seconds

var timeoutObj = setTimeout(printHello, 2000);

5. __dirname:

It is a string. It specifies the name of the directory that currently contains the code.

console.log(__dirname);

6. __filename:

It specifies the filename of the code being executed. This is the resolved absolute path of this code file. The value inside a module is the path to that module file.

console.log(__filename);

What is Chrome V8?

Chrome V8 is a JavaScript engine, which means that it executes JavaScript code. Originally, JavaScript was written to be executed by web browsers. Chrome V8, or just V8, can execute JavaScript code either within or outside of a browser, which makes server-side scripting possible.

Like a V8 (eight-cylinder) car engine, Chrome V8 is fast and powerful. V8 translates JavaScript code directly into machine code* so that computers can actually understand it, then it executes the translated, or compiled, code. V8 optimizes JavaScript execution as well.

*Machine code is a language that CPUs can understand. It is purely digital, meaning made up of digits.

Q. How V8 compiles JavaScript code?Compilation is the process of converting human-readable code to machine code. There are two ways to compile the code

- Using an Interpreter: The interpreter scans the code line by line and converts it into byte code.

- Using a Compiler: The Compiler scans the entire document and compiles it into highly optimized byte code.

The V8 engine uses both a compiler and an interpreter and follows just-in-time (JIT) compilation to speed up the execution. JIT compiling works by compiling small portions of code that are just about to be executed. This prevents long compilation time and the code being compiles is only that which is highly likely to run.

What is EventEmitter in Node.js?

https://nodejs.dev/en/learn/the-nodejs-event-emitter/

If you worked with JavaScript in the browser, you know how much of the interaction of the user is handled through events: mouse clicks, keyboard button presses, reacting to mouse movements, and so on.

On the backend side, Node.js offers us the option to build a similar system using the events module.

This module, in particular, offers the EventEmitter class, which we'll use to handle our events.

You initialize that using

const EventEmitter = require('events');

const eventEmitter = new EventEmitter();

This object exposes, among many others, the on and emit methods.

emit is used to trigger an eventon is used to add a callback function that's going to be executed when the event is triggered

For example, let's create a start event, and as a matter of providing a sample, we react to that by just logging to the console:

eventEmitter.on('start', () => {

console.log('started');

});

When we run

eventEmitter.emit('start');

the event handler function is triggered, and we get the console log.

You can pass arguments to the event handler by passing them as additional arguments to emit():

eventEmitter.on('start', number => {

console.log(`started ${number}`);

});

eventEmitter.emit('start', 23);

Multiple arguments:

eventEmitter.on('start', (start, end) => {

console.log(`started from ${start} to ${end}`);

});

eventEmitter.emit('start', 1, 100);

The EventEmitter object also exposes several other methods to interact with events, like

once(): add a one-time listenerremoveListener() / off(): remove an event listener from an eventremoveAllListeners(): remove all listeners for an event

You can read more about these methods in the official documentation.

What is EventEmitter in Node.js?

The EventEmitter is a class that facilitates communication/interaction between objects in Node.js. The EventEmitter class can be used to create and handle custom events.

EventEmitter is at the core of Node asynchronous event-driven architecture. Many of Node's built-in modules inherit from EventEmitter including prominent frameworks like Express.js. An emitter object basically has two main features:

- Emitting name events.

- Registering and unregistering listener functions.

Example:

/**

* Callback Events with Parameters

*/

const events = require('events');

const eventEmitter = new events.EventEmitter();

function listener(code, msg) {

console.log(`status ${code} and ${msg}`);

}

eventEmitter.on('status', listener); // Register listener

eventEmitter.emit('status', 200, 'ok');

// Output

status 200 and ok

Q. How does the EventEmitter works in Node.js?

- Event Emitter emits the data in an event called message

- A Listened is registered on the event message

- when the message event emits some data, the listener will get the data

Building Blocks: https://nodejs.dev/en/api/v19/events/

- .emit() - this method in event emitter is to emit an event in module

- .on() - this method is to listen to data on a registered event in node.js

- .once() - it listen to data on a registered event only once.

- .addListener() - it checks if the listener is registered for an event.

- .removeListener() - it removes the listener for an event.

Example 01:

/**

* Callbacks Events

*/

const events = require('events');

const eventEmitter = new events.EventEmitter();

function listenerOne() {

console.log('First Listener Executed');

}

function listenerTwo() {

console.log('Second Listener Executed');

}

eventEmitter.on('listenerOne', listenerOne); // Register for listenerOne

eventEmitter.on('listenerOne', listenerTwo); // Register for listenerOne

// When the event "listenerOne" is emitted, both the above callbacks should be invoked.

eventEmitter.emit('listenerOne');

// Output

First Listener Executed

Second Listener Executed

Example 02:

/**

* Emit Events Once

*/

const events = require('events');

const eventEmitter = new events.EventEmitter();

function listenerOnce() {

console.log('listenerOnce fired once');

}

eventEmitter.once('listenerOne', listenerOnce); // Register listenerOnce

eventEmitter.emit('listenerOne');

// Output

listenerOnce fired once

What are the EventEmitter methods available in Node.js?

EventEmitter Methods Description .addListener(event, listener) Adds a listener to the end of the listeners array for the specified event. .on(event, listener) Adds a listener to the end of the listeners array for the specified event. It can also be called as an alias of emitter.addListener() .once(event, listener) This listener is invoked only the next time the event is fired, after which it is removed. .removeListener(event, listener) Removes a listener from the listener array for the specified event. .removeAllListeners([event]) Removes all listeners, or those of the specified event. .setMaxListeners(n) By default EventEmitters will print a warning if more than 10 listeners are added for a particular event. .getMaxListeners() Returns the current maximum listener value for the emitter which is either set by emitter.setMaxListeners(n) or defaults to EventEmitter.defaultMaxListeners. .listeners(event) Returns a copy of the array of listeners for the specified event. .emit(event[, arg1][, arg2][, ...]) Raise the specified events with the supplied arguments. .listenerCount(type) Returns the number of listeners listening to the type of event.

How the Event Loop Works in Node.js?

The event loop allows Node.js to perform non-blocking I/O operations despite the fact that JavaScript is single-threaded. It is done by offloading operations to the system kernel whenever possible.

Event Loop plays a major role in the execution of asynchronous functions The event loop plays a crucial role in scheduling which operations our only thread should be performing at any given point in time. Hence the event loop helps us to understand Node's asynchronous processes and its non-blocking I/O nature.

Node.js is a single-threaded application, but it can support concurrency via the concept of event and callbacks. Every API of Node.js is asynchronous and being single-threaded, they use async function calls to maintain concurrency. Node uses observer pattern. Node thread keeps an event loop and whenever a task gets completed, it fires the corresponding event which signals the event-listener function to execute.

Features of Event Loop:

- Event loop is an endless loop, which waits for tasks, executes them and then sleeps until it receives more tasks.

- The event loop executes tasks from the event queue only when the call stack is empty i.e. there is no ongoing task.

- The event loop allows us to use callbacks and promises.

- The event loop executes the tasks starting from the oldest first.

Example:

/**

* Event loop in Node.js

*/

const events = require('events');

const eventEmitter = new events.EventEmitter();

// Create an event handler as follows

const connectHandler = function connected() {

console.log('connection succesful.');

eventEmitter.emit('data_received');

}

// Bind the connection event with the handler

eventEmitter.on('connection', connectHandler);

// Bind the data_received event with the anonymous function

eventEmitter.on('data_received', function() {

console.log('data received succesfully.');

});

// Fire the connection event

eventEmitter.emit('connection');

console.log("Program Ended.");

// Output

Connection succesful.

Data received succesfully.

Program Ended.

How are event listeners created in Node.JS?

An array containing all eventListeners is maintained by Node. Each time .on() function is executed, a new event listener is added to that array. When the concerned event is emitted, each eventListener that is present in the array is called in a sequential or synchronous manner.

The event listeners are called in a synchronous manner to avoid logical errors, race conditions etc. The total number of listeners that can be registered for a particular event, is controlled by .setMaxListeners(n). The default number of listeners is 10.

emitter.setMaxlisteners(12);

The Event Loop - A Quick Primer

Node.js is a single-threaded, event-driven platform that can run non-blocking, asynchronous code. These Node.js features make it memory efficient. Even though JavaScript is single-threaded, the event loop enables Node.js to perform non-blocking I/O operations. It is accomplished by delegating tasks to the operating system whenever and wherever possible.

Because most operating systems are multi-threaded, they can handle multiple operations that are running in the background. When one of these operations is finished, the kernel notifies Node.js, and the callback associated with that operation is added to the event queue, where it will eventually be executed.

Features of Event Loop:

- An event loop is an infinite loop that waits for tasks, executes them, and then sleeps until more tasks are received.

- When the call stack is empty, i.e., there are no ongoing tasks, the event loop executes tasks from the event queue.

- We can use callbacks and promises in the event loop.

- The event loop executes the tasks in reverse order, beginning with the oldest.

Example:

console.log("One");

setTimeout(function(){

console.log("Two");

}, 1000);

console.log("Three");Output:

One

Three

Two

The first console log statement is pushed to the call stack in the above example, and "One" is logged on the console before the task is popped from the stack. Following that, the setTimeout is added to the queue, the task is sent to the operating system, and the task's timer is set. After that, this task is removed from the stack. The third console log statement is then pushed to the call stack, "Three" is logged on the console, and the task is removed from the stack.

Timers in JavaScript

A timer is used in JavaScript to execute a task or function at a specific time. The timer is essentially used to delay the execution of the program or to execute the JavaScript code at regular intervals. You can delay the execution of the code by using a timer. As a result, when an event occurs or a page loads, the code does not complete its execution at the same time.

Advertisement banners on websites, which change every 2-3 seconds, are the best example of a timer. These advertising banners are rotated at regular intervals on websites such as Flipkart. To change them, you set a time interval.

JavaScript provides two timer functions, setInterval() and setTimeout(), which help to delay code execution and allow one or more operations to be performed repeatedly.

setTimeout():

The setTimeout() function allows users to postpone the execution of code. The setTimeout() method accepts two parameters, one of which is a user-defined function, and the other is a time parameter to delay execution. The time parameter, which is optional to pass, stores the time in milliseconds (1 second = 1000 milliseconds).

setInterval():

The setInterval method is similar to the setTimeout() function in some ways. It repeats the specified function after a time interval. Alternatively, you can say that a function is executed repeatedly after a certain amount of time specified by the user in this function.

Timers in Node.js - setTimeout()

https://www.knowledgehut.com/blog/web-development/how-to-use-timers-in-nodejssetTimeout() can be used to execute code after a specified number of milliseconds. This function is equivalent to window. setTimeout() from the browser JavaScript API, but no code string can be passed to be executed.

setTimeout() takes a function to execute as the first argument and a millisecond delay defined as a number as the second. Additional arguments may be provided, and these will be passed to the function. As an example, consider the following:

Using setTimeout()

The timeout interval is not guaranteed to execute after that exact number of milliseconds. This is because any other code that blocks or holds onto the event loop will delay the execution of the timeout. The only guarantee is that the timeout will not be executed sooner than the timeout interval specified.

setTimeout(function A() {

return console.log('Hello World!');

}, 2000);

console.log('Executed before A');

clearTimeout():

The clearTimeout() method deactivates a timer that was previously set with the setTimeout() method.

The ID value returned by setTimeout() is passed to the clearTimeout() method as a parameter.

Syntax:

clearTimeout(id_of_settimeout)

Example:

function welcome () {

console.log("Welcome to Knowledgehut!");

}

var id1 = setTimeout(welcome,1000);

var id2 = setInterval(welcome,1000);

clearTimeout(id1);

Timers in Node.js - setImmediate()

To execute code at the end of the loop cycle, use the setImmediate() method. In layman's terms, this method divides tasks that take longer to complete, in order to run a callback function that is triggered by other operations such as events.

Syntax:

let immediateId = setImmediate(callbackFunction, [param1, param2, ...]);

let immediateId = setImmediate(callbackFunction);

The function to be executed will be the first argument to setImmediate(). When the function is executed, any additional arguments will be passed to it.

Now consider the difference between setImmediate() and process. nextTick(), as well as when to use which.

While processing, setImmediate() is executed in the Check handlers phase. process.nextTick() is called at the start of the event loop and at the end of each phase.

process.nextTick() has higher priority than setImmediate():

setImmediate(() => console.log('I run immediately'))

process.nextTick(() => console.log('But I run before that'))Output:

Using setImmediate()

Multiple setImmediate functions are called in the following example. When you do this, the callback functions are queued for execution in the order in which they are created. After each event loop iteration, the entire callback queue is processed. If an immediate timer is queued from within an executing callback, it will not be triggered until the next iteration of the event loop. To kick-start your web development career, enroll in the best Online Courses for Web Development.

Example:

setImmediate(function A() {

setImmediate(function B() {

console.log(1);

setImmediate(function D() {

console.log(2);

});

});

setImmediate(function C() {

console.log(3);

setImmediate(function E() {

console.log(4);

});

});

});

console.log('Started');

clearImmediate():

The clearImmediate function is used to remove the function call that was scheduled by the setImmediate function. Both of these functions can be found in Node.js's Timers module.

Example:

console.log("Before the setImmediate call")

let timerID = setImmediate(() => {console.log("Hello, World")});

console.log("After the setImmediate call")

clearImmediate(timerID);

Timers in Node.js - setInterval()

This method, unlike setTimeout(), is used to execute code multiple times. For example, the company may send out weekly newsletters to its Edge as a Service customer. This is where the setInterval() method comes into play. It is an infinite loop that will continue to execute as long as it is not terminated (or halted).

As the second argument, setInterval() accepts a function argument that will run an infinite number of times with a given millisecond delay. In the same way that setTimeout() accepts additional arguments beyond the delay, these will be passed on to the function call. The delay, like setTimeout(), cannot be guaranteed due to operations that may stay in the event loop and should thus be treated as an approximation.

Syntax:

let intervalId = setInterval(callbackFunction, [delay, argument1, argument2, ...]); //option 1

let intervalId = setInterval(callbackFunction[, delayDuration]); // option 2

let intervalId = setInterval(code, [delayDuration]); //option 3

Using setInterval()

Example:

setInterval(function A() {

return console.log('Hello World!');

}, 1000);

// Executed right away

console.log('Executed before A');

setInterval(), like setTimeout() returns a Timeout object that can be used to reference and modify the interval that was set.

In the above example, function A() will execute after every 1000 milliseconds.

clearInterval():

Example:

var si = setInterval(function A() {

return console.log("Hello World!");

}, 1000);

setTimeout(function() {

clearInterval(si);

}, 4000);

Using Timer.unref()

The timer module is used to schedule functions that will be called later. Because it is a global API, there is no need to import (require("timers")) to use it.

The Timeout Class contains an object (setTimeout()/setInterval()) that is created internally to schedule actions, and (clearTimeout()/clearInterval()) that can be passed to cancel those scheduled actions. When a timeout is set, the Node.js event loop will continue to run until clearTimeout() is called. The setTimeout() method returns timeout objects that can be used to control this default behaviour, and it exports both the timeout.ref() and timeout.unref() functions.

timeout.ref():

When the Timeout is active and (timeout.ref()) is called, it requests that the Node.js event loop not exit for an extended period of time. In any case, calling this Method multiple times has no effect.

Syntax:

timeout.ref()

timeout.unref():

When the Timeout is enabled, the Node.js event loop is not required to remain active. If any other activity keeps the event loop running, the Timeout object's callback is invoked after the process exits. In any case, calling this Method multiple times has no effect.

Syntax:

timeout.unref()

Example:

var Timeout = setTimeout(function alfa() {

console.log("0.> Setting Timeout", 12);

});

console.log("1 =>", Timeout.ref());

Timeout.unref()

Timeout.ref()

console.log("2 =>", Timeout.unref());

clearTimeout(Timeout);

console.log("3 => Printing after clearing Timeout"); Output:

What is the difference between process.nextTick() and setImmediate()?

1. process.nextTick():

The process.nextTick() method adds the callback function to the start of the next event queue. It is to be noted that, at the start of the program process.nextTick() method is called for the first time before the event loop is processed.

2. setImmediate():

The setImmediate() method is used to execute a function right after the current event loop finishes. It is callback function is placed in the check phase of the next event queue.

Example:

/**

* setImmediate() and process.nextTick()

*/

setImmediate(() => {

console.log("1st Immediate");

});

setImmediate(() => {

console.log("2nd Immediate");

});

process.nextTick(() => {

console.log("1st Process");

});

process.nextTick(() => {

console.log("2nd Process");

});

// First event queue ends here

console.log("Program Started");

// Output

Program Started

1st Process

2nd Process

1st Immediate

2nd Immediate

What is callback function in Node.js?

A callback is a function which is called when a task is completed, thus helps in preventing any kind of blocking and a callback function allows other code to run in the meantime.

Callback is called when task get completed and is asynchronous equivalent for a function. Using Callback concept, Node.js can process a large number of requests without waiting for any function to return the result which makes Node.js highly scalable.

What is a Callback?

A callback function is a simple javascript function that is passed as an argument to another function and is executed when the other function has completed its execution. In layman's terms, a callback is generally used as a parameter to another function. Callbacks in Node.js are so common that you probably used callbacks yourself without understanding that they are called callbacks.

Example:

/**

* Callback Function

*/

function message(name, callback) {

console.log("Hi" + " " + name);

callback();

}

// Callback function

function callMe() {

console.log("I am callback function");

}

// Passing function as an argument

message("Node.JS", callMe);

Output:

Hi Node.JS

I am callback function

What are the difference between Events and Callbacks?

1. Events:

Node.js events module which emits named events that can cause corresponding functions or callbacks to be called. Functions ( callbacks ) listen or subscribe to a particular event to occur and when that event triggers, all the callbacks subscribed to that event are fired one by one in order to which they were registered.

All objects that emit events are instances of the EventEmitter class. The event can be emitted or listen to an event with the help of EventEmitter

Example:

/**

* Events Module

*/

const event = require('events');

const eventEmitter = new event.EventEmitter();

// add listener function for Sum event

eventEmitter.on('Sum', function(num1, num2) {

console.log('Total: ' + (num1 + num2));

});

// call event

eventEmitter.emit('Sum', 10, 20);

// Output

Total: 30

2. Callbacks:

A callback function is a function passed into another function as an argument, which is then invoked inside the outer function to complete some kind of routine or action.

Example:

/**

* Callbacks

*/

function sum(number) {

console.log('Total: ' + number);

}

function calculator(num1, num2, callback) {

let total = num1 + num2;

callback(total);

}

calculator(10, 20, sum);

// Output

Total: 30

Callback functions are called when an asynchronous function returns its result, whereas event handling works on the observer pattern. The functions that listen to events act as Observers. Whenever an event gets fired, its listener function starts executing. Node.js has multiple in-built events available through events module and EventEmitter class which are used to bind events and event-listeners

What is callback hell in Node.js?

The callback hell contains complex nested callbacks. Here, every callback takes an argument that is a result of the previous callbacks. In this way, the code structure looks like a pyramid, making it difficult to read and maintain. Also, if there is an error in one function, then all other functions get affected.

An asynchronous function is one where some external activity must complete before a result can be processed; it is "asynchronous" in the sense that there is an unpredictable amount of time before a result becomes available. Such functions require a callback function to handle errors and process the result.

Example:

/**

* Callback Hell

*/

firstFunction(function (a) {

secondFunction(a, function (b) {

thirdFunction(b, function (c) {

// And so on…

});

});

});

How to avoid callback hell in Node.js?

1. Managing callbacks using Async.js:

Async is a really powerful npm module for managing asynchronous nature of JavaScript. Along with Node.js, it also works for JavaScript written for browsers.

Async provides lots of powerful utilities to work with asynchronous processes under different scenarios.

npm install --save async

2. Managing callbacks hell using promises:

Promises are alternative to callbacks while dealing with asynchronous code. Promises return the value of the result or an error exception. The core of the promises is the .then() function, which waits for the promise object to be returned.

The .then() function takes two optional functions as arguments and depending on the state of the promise only one will ever be called. The first function is called when the promise if fulfilled (A successful result). The second function is called when the promise is rejected.

Example:

/**

* Promises

*/

const myPromise = new Promise((resolve, reject) => {

setTimeout(() => {

resolve("Successful!");

}, 300);

});

3. Using Async Await:

Async await makes asynchronous code look like it's synchronous. This has only been possible because of the reintroduction of promises into node.js. Async-Await only works with functions that return a promise.

Example:

/**

* Async Await

*/

const getrandomnumber = function(){

return new Promise((resolve, reject)=>{

setTimeout(() => {

resolve(Math.floor(Math.random() * 20));

}, 1000);

});

}

const addRandomNumber = async function(){

const sum = await getrandomnumber() + await getrandomnumber();

console.log(sum);

}

addRandomNumber();

What is typically the first argument passed to a callback handler?

The first parameter of the callback is the error value. If the function hits an error, then they typically call the callback with the first parameter being an Error object.

Example:

/**

* Callback Handler

*/

const Division = (numerator, denominator, callback) => {

if (denominator === 0) {

callback(new Error('Divide by zero error!'));

} else {

callback(null, numerator / denominator);

}

};

// Function Call

Division(5, 0, (err, result) => {

if (err) {

return console.log(err.message);

}

console.log(`Result: ${result}`);

});

Q. What are the timing features of Node.js?

The Timers module in Node.js contains functions that execute code after a set period of time. Timers do not need to be imported via require(), since all the methods are available globally to emulate the browser JavaScript API.

Some of the functions provided in this module are

1. setTimeout():

This function schedules code execution after the assigned amount of time ( in milliseconds ). Only after the timeout has occurred, the code will be executed. This method returns an ID that can be used in clearTimeout() method.

Syntax:

setTimeout(callback, delay, args )

Example:

function printMessage(arg) {

console.log(`${arg}`);

}

setTimeout(printMessage, 1000, 'Display this Message after 1 seconds!');

2. setImmediate():

The setImmediate() method executes the code at the end of the current event loop cycle. The function passed in the setImmediate() argument is a function that will be executed in the next iteration of the event loop.

Syntax:

setImmediate(callback, args)

Example:

// Setting timeout for the function

setTimeout(function () {

console.log('setTimeout() function running...');

}, 500);

// Running this function immediately before any other

setImmediate(function () {

console.log('setImmediate() function running...');

});

// Directly printing the statement

console.log('Normal statement in the event loop');

// Output

// Normal statement in the event loop

// setImmediate() function running...

// setTimeout() function running...

3. setInterval():

The setInterval() method executes the code after the specified interval. The function is executed multiple times after the interval has passed. The function will keep on calling until the process is stopped externally or using code after specified time period. The clearInterval() method can be used to prevent the function from running.

Syntax:

setInterval(callback, delay, args)

Example:

setInterval(function() {

console.log('Display this Message intervals of 1 seconds!');

}, 1000);

How to implement a sleep function in Node.js?

One way to delay execution of a function in Node.js is to use async/await with promises to delay execution without callbacks function. Just put the code you want to delay in the callback. For example, below is how you can wait 1 second before executing some code.

Example:

function delay(time) {

return new Promise((resolve) => setTimeout(resolve, time));

}

async function run() {

await delay(1000);

console.log("This printed after about 1 second");

}

run();

Node.js as a File Server

The Node.js file system module allows you to work with the file system on your computer.

To include the File System module, use the require() method:

var fs = require('fs');Common use for the File System module:

- Read files

- Create files

- Update files

- Delete files

- Rename files

Read Files

The fs.readFile() method is used to read files on your computer.

Assume we have the following HTML file (located in the same folder as Node.js):

demofile1.html

<html>

<body>

<h1>My Header</h1>

<p>My paragraph.</p>

</body>

</html>Create a Node.js file that reads the HTML file, and return the content:

ExampleGet your own Node.js Server

var http = require('http');

var fs = require('fs');

http.createServer(function (req, res) {

fs.readFile('demofile1.html', function(err, data) {

res.writeHead(200, {'Content-Type': 'text/html'});

res.write(data);

return res.end();

});

}).listen(8080);Save the code above in a file called "demo_readfile.js", and initiate the file:

Initiate demo_readfile.js:

C:\Users\Your Name>node demo_readfile.jsIf you have followed the same steps on your computer, you will see the same result as the example: http://localhost:8080

ADVERTISEMENT

Create Files

The File System module has methods for creating new files:

fs.appendFile()fs.open()fs.writeFile()

The fs.appendFile() method appends specified content to a file. If the file does not exist, the file will be created:

Example

Create a new file using the appendFile() method:

var fs = require('fs');

fs.appendFile('mynewfile1.txt', 'Hello content!', function (err) {

if (err) throw err;

console.log('Saved!');

});The fs.open() method takes a "flag" as the second argument, if the flag is "w" for "writing", the specified file is opened for writing. If the file does not exist, an empty file is created:

Example

Create a new, empty file using the open() method:

var fs = require('fs');

fs.open('mynewfile2.txt', 'w', function (err, file) {

if (err) throw err;

console.log('Saved!');

});The fs.writeFile() method replaces the specified file and content if it exists. If the file does not exist, a new file, containing the specified content, will be created:

Example

Create a new file using the writeFile() method:

var fs = require('fs');

fs.writeFile('mynewfile3.txt', 'Hello content!', function (err) {

if (err) throw err;

console.log('Saved!');

});

Update Files

The File System module has methods for updating files:

fs.appendFile()fs.writeFile()

The fs.appendFile() method appends the specified content at the end of the specified file:

Example

Append "This is my text." to the end of the file "mynewfile1.txt":

var fs = require('fs');

fs.appendFile('mynewfile1.txt', ' This is my text.', function (err) {

if (err) throw err;

console.log('Updated!');

});The fs.writeFile() method replaces the specified file and content:

Example

Replace the content of the file "mynewfile3.txt":

var fs = require('fs');

fs.writeFile('mynewfile3.txt', 'This is my text', function (err) {

if (err) throw err;

console.log('Replaced!');

});

Delete Files

To delete a file with the File System module, use the fs.unlink() method.

The fs.unlink() method deletes the specified file:

Example

Delete "mynewfile2.txt":

var fs = require('fs');

fs.unlink('mynewfile2.txt', function (err) {

if (err) throw err;

console.log('File deleted!');

});

Rename Files

To rename a file with the File System module, use the fs.rename() method.

The fs.rename() method renames the specified file:

Example

Rename "mynewfile1.txt" to "myrenamedfile.txt":

var fs = require('fs');

fs.rename('mynewfile1.txt', 'myrenamedfile.txt', function (err) {

if (err) throw err;

console.log('File Renamed!');

}); How Node.js read the content of a file?

The "normal" way in Node.js is probably to read in the content of a file in a non-blocking, asynchronous way. That is, to tell Node to read in the file, and then to get a callback when the file-reading has been finished. That would allow us to handle several requests in parallel.

Common use for the File System module:

- Read files

- Create files

- Update files

- Delete files

- Rename files

Example: Read Files

<!-- index.html -->

<html>

<body>

<h1>File Header</h1>

<p>File Paragraph.</p>

</body>

</html>

/**

* read_file.js

*/

const http = require('http');

const fs = require('fs');

http.createServer(function (req, res) {

fs.readFile('index.html', function(err, data) {

res.writeHead(200, {'Content-Type': 'text/html'});

res.write(data);

res.end();

});

}).listen(3000);

How many types of streams are present in node.js?

Streams are objects that let you read data from a source or write data to a destination in continuous fashion. There are four types of streams

- Readable − Stream which is used for read operation.

- Writable − Stream which is used for write operation.

- Duplex − Stream which can be used for both read and write operation.

- Transform − A type of duplex stream where the output is computed based on input.

Each type of Stream is an EventEmitter instance and throws several events at different instance of times.

Methods:

- data − This event is fired when there is data is available to read.

- end − This event is fired when there is no more data to read.

- error − This event is fired when there is any error receiving or writing data.

- finish − This event is fired when all the data has been flushed to underlying system.

1. Reading from a Stream:

const fs = require("fs");

let data = "";

// Create a readable stream

const readerStream = fs.createReadStream("file.txt");

// Set the encoding to be utf8.

readerStream.setEncoding("UTF8");

// Handle stream events --> data, end, and error

readerStream.on("data", function (chunk) {

data += chunk;

});

readerStream.on("end", function () {

console.log(data);

});

readerStream.on("error", function (err) {

console.log(err.stack);

});

2. Writing to a Stream:

const fs = require("fs");

const data = "File writing to a stream example";

// Create a writable stream

const writerStream = fs.createWriteStream("file.txt");

// Write the data to stream with encoding to be utf8

writerStream.write(data, "UTF8");

// Mark the end of file

writerStream.end();

// Handle stream events --> finish, and error

writerStream.on("finish", function () {

console.log("Write completed.");

});

writerStream.on("error", function (err) {

console.log(err.stack);

});

3. Piping the Streams:

Piping is a mechanism where we provide the output of one stream as the input to another stream. It is normally used to get data from one stream and to pass the output of that stream to another stream. There is no limit on piping operations.

const fs = require("fs");

// Create a readable stream

const readerStream = fs.createReadStream('input.txt');

// Create a writable stream

const writerStream = fs.createWriteStream('output.txt');

// Pipe the read and write operations

// read input.txt and write data to output.txt

readerStream.pipe(writerStream);

4. Chaining the Streams:

Chaining is a mechanism to connect the output of one stream to another stream and create a chain of multiple stream operations. It is normally used with piping operations.

const fs = require("fs");

const zlib = require('zlib');

// Compress the file input.txt to input.txt.gz

fs.createReadStream('input.txt')

.pipe(zlib.createGzip())

.pipe(fs.createWriteStream('input.txt.gz'));

console.log("File Compressed.");

Node.js multithreading: Worker threads and why they matter

10 min read

Editor’s note: This post was updated on 18 January 2022 to include some new information about the Web Workers API and web workers in general, improve and add definitions of key terms, and reflect stable support for the worker_threads module.

Since the release of Node.js v10.5.0, there’s a new worker_threads module available, which has been stable since Node.js v12 LTS.

What exactly is this Worker thread module, and why do we need it? In this post, we will discuss Worker threads alongside:

- The historical reasons concurrency is implemented in JavaScript and Node.js

- The problems we might find and their current solutions

- The future of parallel processing with Worker threads

The history of single-threaded JavaScript

JavaScript was conceived as a single-threaded programming language that ran in a browser. Being single-threaded means that only one set of instructions is executed at any time in the same process (the browser, in this case, or just the current tab in modern browsers).

This made things easier for developers because JavaScript was initially a language that was only useful for adding interaction to webpages, form validations, and so on — nothing that required the complexity of multithreading.

Ryan Dahl saw this limitation as an opportunity when he created Node.js. He wanted to implement a server-side platform based on asynchronous I/O to avoid a need for threads and make things a lot easier.

But concurrency can be a very hard problem to solve. Having many threads accessing the same memory can produce race conditions that are very hard to reproduce and fix.

Is Node.js single-threaded?

Our Node.js applications are only sort of single-threaded, in reality. We can run things in parallel, but we don’t create threads or sync them. The virtual machine and the operating system run the I/O in parallel for us, and when it’s time to send data back to our JavaScript code, it’s the JavaScript that runs in a single thread.

In other words, everything runs in parallel except for our JavaScript code. Synchronous blocks of JavaScript code are always run one at a time:

let flag = false

function doSomething() {

flag = true

// More code (that doesn't change `flag`)...

// We can be sure that `flag` here is true.

// There's no way another code block could have changed

// `flag` since this block is synchronous.

}

This is great if all we do is asynchronous I/O. Our code consists of small portions of synchronous blocks that run fast and pass data to files and streams, so our JavaScript code is so fast that it doesn’t block the execution of other pieces of JavaScript.

A lot more time is spent waiting for I/O events to happen than JavaScript code being executed. Let’s see this with a quick example:

db.findOne('SELECT ... LIMIT 1', function(err, result) {

if (err) return console.error(err)

console.log(result)

})

console.log('Running query')

setTimeout(function() {

console.log('Hey there')

}, 1000)

Maybe this database query takes a minute, but the “Running query” message will be shown immediately after invoking the query. And we will see the “Hey there” message a second after invoking the query regardless of whether the query is still running or not.

Our Node.js application just invokes the function and does not block the execution of other pieces of code. It will get notified through the callback when the query is done, and we will receive the result.

The need for threads to perform CPU-intensive tasks

What happens if we need to do synchronous-intense stuff, such as making complex calculations in memory in a large dataset? Then we might have a synchronous block of code that takes a lot of time and will block the rest of the code.

Imagine that a calculation takes 10 seconds. If we are running a web server, that means that all of the other requests get blocked for at least 10s because of that calculation. That’s a disaster; anything more than 100ms could be too much.

JavaScript and Node.js were not meant to be used for CPU-bound tasks. Since JavaScript is single-threaded, this will freeze the UI in the browser and queue any I/O events in Node.js.

Going back to our previous example, imagine we now have a query that returns a few thousand results and we need to decrypt the values in our JavaScript code:

db.findAll('SELECT ...', function(err, results) {

if (err) return console.error(err)

// Heavy computation and many results

for (const encrypted of results) {

const plainText = decrypt(encrypted)

console.log(plainText)

}

})

We will get the results in the callback once they are available. Then, no other JavaScript code is executed until our callback finishes its execution.

Usually, as we said before, the code is minimal and fast enough, but in this case, we have many results and we need to perform heavy computations on them. This might take a few seconds, and any other JavaScript execution will be queued during that time, which means we might be blocking all our users during that time if we are running a server in the same application.

Why we will never have multithreading in JavaScript

So, at this point, many people might think our solution should be to add a new module in the Node.js core and allow us to create and sync threads.

But that isn’t possible.If we add threads to JavaScript, then we are changing the nature of the language. We cannot just add threads as a new set of classes or functions available — we’d probably need to change the language to support multithreading. Languages that currently support it have keywords such as synchronized in order to enable threads to cooperate.

It’s a shame we don’t have a nice way of solving this use case in a mature server-side platform such as Node.js. In Java, for example, even some numeric types are not atomic; if you don’t synchronize their access, you could end up having two threads change the value of a variable.

The result would be that, after both threads have accessed the variable, it has a few bytes changed by one thread and a few bytes changed by the other thread — and, thus, will not result in any valid value.

The naive solution: Synchronous code-splitting

Node.js won’t evaluate the next code block in the event queue until the previous one has finished executing. So, one simple thing we can do is split our code into smaller synchronous code blocks and call setImmediate(callback) to tell Node.js we are done. This way, it can continue executing things that are pending in the queue; or, in other words, it can move on to the next iteration (or “tick”) of the event loop.

Let’s see how we can refactor some code from our previous example to take advantage of this. Imagine we have a large array that we want to process, and every item in the array requires CPU-intensive processing:

const arr = [/*large array*/]

for (const item of arr) {

// do heavy stuff for each item on the array

}

// code that runs after the whole array is executed

Like we said before, if we do this, it will take too much time to process the whole array and the rest of the JavaScript execution will be blocked. Let’s split this into smaller chunks and use setImmediate(callback):

const crypto = require('crypto')

const arr = new Array(200).fill('something')

function processChunk() {

if (arr.length === 0) {

// code that runs after the whole array is executed

} else {

console.log('processing chunk');

// pick 10 items and remove them from the array

const subarr = arr.splice(0, 10)

for (const item of subarr) {

// do heavy stuff for each item on the array

doHeavyStuff(item)

}

// Put the function back in the queue

setImmediate(processChunk)

}

}

processChunk()

function doHeavyStuff(item) {

crypto.createHmac('sha256', 'secret').update(new Array(10000).fill(item).join('.')).digest('hex')

}

// This is just for confirming that we can continue

// doing things

let interval = setInterval(() => {

console.log('tick!')

if (arr.length === 0) clearInterval(interval)

}, 0)

Now, we can process 10 items each time the event loop runs and call setImmediate(callback) so that if there’s something else the program needs to do, it will do it in between those chunks of 10 items. I’ve added a setInterval() for demonstrating exactly that.

More great articles from LogRocket:

- Don't miss a moment with The Replay, a curated newsletter from LogRocket

- Learn how LogRocket's Galileo cuts through the noise to proactively resolve issues in your app

- Use React's useEffect to optimize your application's performance

- Switch between multiple versions of Node

- Discover how to animate your React app with AnimXYZ

- Explore Tauri, a new framework for building binaries

- Advisory boards aren’t just for executives. Join LogRocket’s Content Advisory Board. You’ll help inform the type of content we create and get access to exclusive meetups, social accreditation, and swag.

As you can see, the code gets more complicated. And many times, the algorithm is a lot more complex than this, so it’s hard to know where to put the setImmediate() to find a good balance. Besides, the code now is asynchronous, and if we depend on third-party libraries, we might not be able to split the execution into smaller chunks.

Running parallel processes in the background, without threads

So, setImmediate() is sufficient for some simple use cases, but it’s far from an ideal solution. Can we do parallel processing without threads? Yes!

What we need is some kind of background processing, a way of running a task with input that could use whatever amount of CPU and time it needs to return a result back to the main application. Something like this:

// Runs `script.js` in a new environment without sharing memory.

const service = createService('script.js')

// We send an input and receive an output

service.compute(data, function(err, result) {

// result available here

})

The reality is that we can already do background processing in Node.js: we can fork the process and do exactly that using message passing, which you can imagine as simply as passing a message from one process to another. This achieves the following goals:

- The main process can communicate with the child process by sending and receiving events

- No memory is shared

- All the data exchanged is “cloned,” meaning that changing it in one side doesn’t change it on the other side

- If we don’t share memory, we don’t have race conditions, and we don’t need threads!

Well, hold on. This is a solution, but it’s not the ideal solution. Forking a process is expensive and slow — it means running a new virtual machine from scratch and using a lot of memory, since processes don’t share memory.

Can we reuse the same forked process? Sure, but sending different heavy, synchronously-executed workloads inside the forked process creates two problems:

- You may not blocking the main app, but the forked process will only be able to process one task at a time

- If one task crashes the process, it will leave all tasks sent to the same process unfinished

If you have two tasks — one that will take 10s and one that will take 1s, in that order — it’s not ideal to have to wait 10s to execute the second task. It’s even less ideal if that task never reaches execution because another process got in its way.

Since we are forking processes, we want to take advantage of our OS’s scheduling and all the cores of our machine. The same way you can listen to music and browse the internet at the same time, you can fork two processes and execute all the tasks in parallel.

In order to fix these problems, we need multiple forks, not only one. But we need to limit the number of forked processes because each one will have all the virtual machine code duplicated in memory, meaning a few MBs per process and a non-trivial boot time.

Using worker-farm to pool threads

Like with database connections, we need a pool of processes that are ready to be used, run a task at a time in each one, and reuse the process once the task has finished. This looks complex to implement, and it would be if we were building it from scratch!

Let’s use worker-farm to help us out instead:

// main app

const workerFarm = require('worker-farm')

const service = workerFarm(require.resolve('./script'))

service('hello', function (err, output) {

console.log(output)

})

// script.js

// This will run in forked processes

module.exports = (input, callback) => {

callback(null, input + ' ' + world)

}

So, problem solved? Well, while we have solved the problem, we are still using a lot more memory than a multithreaded solution.

Threads are still very lightweight in terms of resources compared to forked processes. This is the reason why Worker threads were born.

What are Worker threads?

Worker threads have isolated contexts. They exchange information with the main process using message passing, so we avoid the race conditions problem regular threads have! But they do live in the same process, so they use a lot less memory.

You can share memory with Worker threads and pass ArrayBuffer or SharedArrayBuffer objects that are specifically meant for that. Only use them if you need to do CPU-intensive tasks with large amounts of data. Some examples of CPU-intensive tasks with Node workers are discussed in this article. They allow you to avoid a need for data serialization.

Using Worker threads for multiple tasks

You can start using the worker_threads module today if you run Node.js ≥ v10.5.0. If you’re using any version ≤ 11.7.0, however, you need to enable it by using the --experimental-worker flag when invoking Node.js.

Keep in mind that creating a Worker — even though it’s a lot cheaper than forking a process — can also use too many resources depending on your needs. In that case, the docs recommend you create a pool of Workers.

You can probably look for a generic pool implementation or a specific one in npm instead of creating your own pool implementation. Node.js provides AsyncResource to provide proper async tracking of a worker pool.

Let’s see a simple example. First, we’ll implement the main file, create a Worker thread, and give it some data. The API is event-driven, but I’m going to wrap it into a promise that resolves in the first message received from the Worker:

// index.js

// run with node --experimental-worker index.js on Node.js 10.x

const { Worker } = require('worker_threads')

function runService(workerData) {

return new Promise((resolve, reject) => {

const worker = new Worker('./service.js', { workerData });

worker.on('message', resolve);

worker.on('error', reject);

worker.on('exit', (code) => {

if (code !== 0)

reject(new Error(`Worker stopped with exit code ${code}`));

})

})

}

async function run() {

const result = await runService('world')

console.log(result);

}

run().catch(err => console.error(err))

As you can see, this is as easy as passing the filename as an argument and the data we want the Worker to process. Remember that this data is cloned and is not in any shared memory.

When we’re finished, we wait for the Worker thread to send us a message by listening to the message event. Implement the service:

const { workerData, parentPort } = require('worker_threads')

// You can do any heavy stuff here, in a synchronous way

// without blocking the "main thread"

parentPort.postMessage({ hello: workerData })

Here, we need two things: the workerData that the main app sent to us and a way to return information to the main app. This is done with the parentPort that has a postMessage method where we will pass the result of our processing.

That’s it! This is the simplest example, but we can yet build more complex things — for example, we could send multiple messages from the Worker thread indicating the execution status if we need to provide feedback.

Or we can send partial results. Imagine that you are processing thousands of images. Maybe you want to send a message per image processed, but you don’t want to wait until all of them are processed.

In order to run the example, remember to use the --experimental-worker flag if you are using any version prior to Node.js 11.7:

node --experimental-worker index.js

For additional information, check out the worker_threads documentation.

What is the Web Workers API?

Maybe you’ve heard of the Web Workers API. The API is different from worker_threads because the needs and technical conditions are different, but they can solve similar problems in the browser runtime.

The Web Workers API is more mature and is well supported by modern browsers. It can be useful if you are doing crypto mining, compressing/decompressing, image manipulation, computer vision (e.g., face recognition), etc. in your web application.

Introducing: Partytown

Web workers can also be used to run third-party scripts. Running heavy scripts from the main thread can cause UX issues on your site, which isn’t ideal, but running a script apart from the main thread can create issues, as we directly don’t have access to the main thread APIs like window, document, or localStorage.

Here’s where Partytown comes in. Partytown is a lazy-loaded, 6kB package that helps you solve the issue mentioned. Your third-party packages will run as expected without affecting the main thread. If you’re interested in trying this out, see our other post exploring the library, or check out their documentation for a more in-depth discussion.

Conclusion

worker_threads is an exciting and useful module if you need to do CPU-intensive tasks in your Node.js application. They provide the same behavior as threads without sharing memory and, thus, avoid the potential race conditions threads introduce. Since the worker_threads module became stable in Node.js v12 LTS, you should feel secure using it in production-grade apps!

When you’re using scrolling through Instagram on your phone, the app sends some requests to its server. Their server receives the request, processes it, and returns a response to your phone. The app on your phone processes the response and presents it to you in a readable manner. Here, the app on your phone talks to Instagram’s servers via what we call Application Programming Interface or APIs.

Let’s take another example to understand APIs. You must have heard of UPI payments and apps like GPay, PhonePe, and Paytm which allow you to do transactions via UPI. The UPI payment system is managed by NPCI or National Payments Corporation of India which exposes its APIs so that these payment apps can use them and facilitate UPI transactions to their customers. Simply put, an API is a way for two or more software systems to communicate with each other.

The application sending the request is commonly referred to as a client and the application sending the response is called the server. So, in the above example, the app on your device is the client which requests data from Instagram’s servers.

The way an API works is that the client sends some request to the server at a particular endpoint, with some payload using one of the HTTP methods; the server processes the request and returns a response which can be in HTML, XML, JSON, etc.

https://www.scaler.com/topics/nodejs/node-js-rest-api/

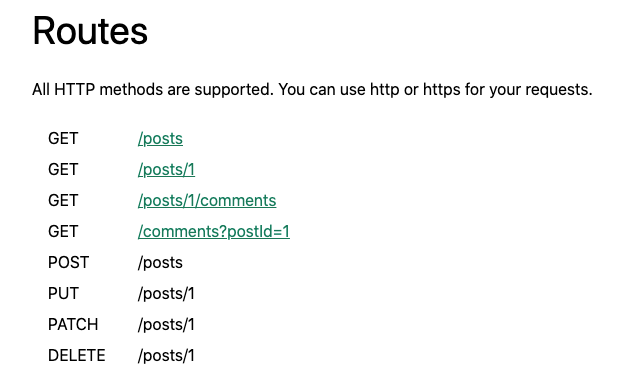

Q. Explain RESTful Web Services in Node.js?

REST stands for REpresentational State Transfer. REST is web standards based architecture and uses HTTP Protocol. It is an architectural style as well as an approach for communications purposes that is often used in various web services development. A REST Server simply provides access to resources and REST client accesses and modifies the resources using HTTP protocol.

HTTP methods:

GET− Provides read-only access to a resource.PUT− Updates an existing resource or creates a new resource.DELETE− Removes a resource.POST− Creates a new resource.PATCH− Update/modify a resource

Example: users.json

{

"user1" : {

"id": 1,

"name" : "Ehsan Philip",

"age" : 24

},

"user2" : {

"id": 2,

"name" : "Karim Jimenez",

"age" : 22

},

"user3" : {

"id": 3,

"name" : "Giacomo Weir",

"age" : 18

}

}List Users ( GET method)

Let's implement our first RESTful API listUsers using the following code in a server.js file −

const express = require('express');

const app = express();

const fs = require("fs");

app.get('/listUsers', function (req, res) {

fs.readFile( __dirname + "/" + "users.json", 'utf8', function (err, data) {

console.log( data );

res.end( data );

});

})

const server = app.listen(3000, function () {

const host = server.address().address

const port = server.address().port

console.log("App listening at http://%s:%s", host, port)

});Add User ( POST method )

Following API will show you how to add new user in the list.

const express = require('express');

const app = express();

const fs = require("fs");

const user = {

"user4" : {

"id": 4,

"name" : "Spencer Amos",

"age" : 28

}

}

app.post('/addUser', function (req, res) {

// First read existing users.

fs.readFile( __dirname + "/" + "users.json", 'utf8', function (err, data) {

data = JSON.parse( data );

data["user4"] = user["user4"];

console.log( data );

res.end( JSON.stringify(data));

});

})

const server = app.listen(3000, function () {

const host = server.address().address

const port = server.address().port

console.log("App listening at http://%s:%s", host, port)

})Delete User:

const express = require('express');

const app = express();

const fs = require("fs");

const id = 2;

app.delete('/deleteUser', function (req, res) {

// First read existing users.

fs.readFile( __dirname + "/" + "users.json", 'utf8', function (err, data) {

data = JSON.parse( data );

delete data["user" + 2];

console.log( data );

res.end( JSON.stringify(data));

});

})

const server = app.listen(3000, function () {

const host = server.address().address

const port = server.address().port

console.log("App listening at http://%s:%s", host, port)

})1. req.body

Generally used in POST/PUT requests.

Use it when you want to send sensitive data(eg. form data) or super long JSON data to the server.

How to send data in request body

- using curl

curl -d '{"key1":"value1", "key2":"value2"}' -H "ContentType: application/json" -X POST http://localhost:3000/giraffe

- using axios

axios.post('/giraffe', {

key1: 'value1',

key2: 'value2'

})

.then(response => {

...

})

.catch(error => {

...

})

How to get data from request body

app.get('/giraffe', (req, res) => {

console.log(req.body.key1) //value1

console.log(req.body.key2) //value2

})

Remember to use express.json() middleware to parse request body else you'll get an error

app.use(express.json())

2. req.params

These are properties attached to the url i.e named route parameters. You prefix the parameter name with a colon(:) when writing your routes.

For instance,

app.get('/giraffe/:number', (req, res) => {

console.log(req.params.number)

})

To send the parameter from the client, just replace its name with the value

GET http://localhost:3000/giraffe/1

3. req.query

req.query is a request object that is populated by request query strings that are found in a URL. These query strings are in key-value form. req.query is mostly used for searching,sorting, filtering, pagination, e.t.c

Say for instance you want to query an API but only want to get data from page 10, this is what you'd generally use.

It written as key=value

GET http://localhost:3000/animals?page=10

To access this in your express server is pretty simple too;

app.get('/animals', ()=>{

console.log(req.query.page) // 10

})

I hope you found this helpful.

What is the difference between req.params and req.query?

The req.params are a part of a path in URL and they're also known as URL variables. for example, if you have the route /books/:id, then the id property will be available as req.params.id. req.params default value is an empty object {}.

A req.query is a part of a URL that assigns values to specified parameters. A query string commonly includes fields added to a base URL by a Web browser or other client application, for example as part of an HTML form. A query is the last part of URL

Example 01: req.params

/**

* req.params

*/

// GET http://localhost:3000/employees/10

app.get('/employees/:id', (req, res, next) => {

console.log(req.params.id); // 10

})